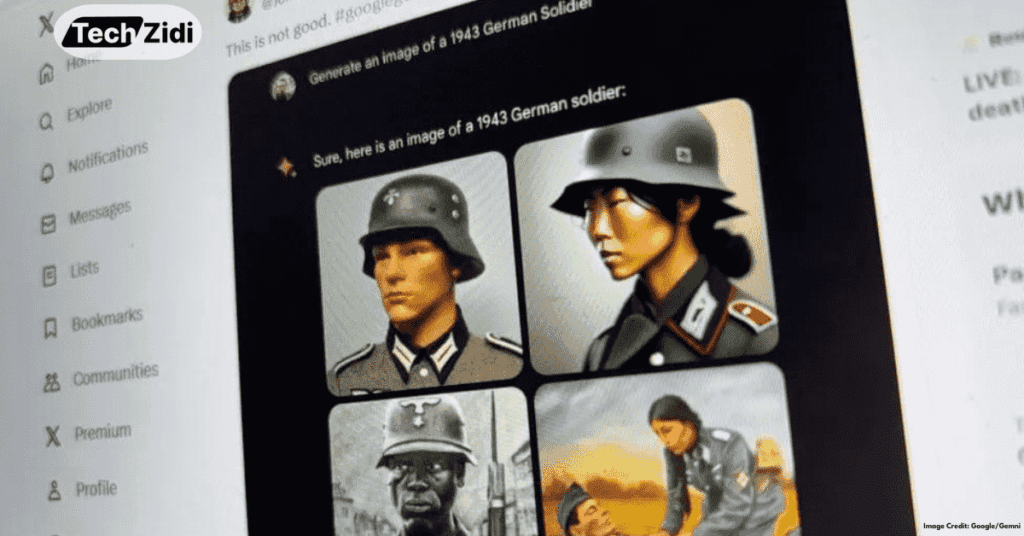

Google’s AI tool, Gemini, has become caught up in the culture war between left- and right-leaning communities. Gemini, essentially Google’s version of ChatGPT, can answer questions in text form and generate pictures in response to text prompts. A viral post showed the tool creating images of the US Founding Fathers and of German soldiers from World War Two that inaccurately included a black man and an Asian woman. Google apologised and immediately “paused” the tool, stating it was “missing the mark.”

However, the tool continued to provide overly political responses. It declined to give a right or wrong answer to a question about Elon Musk posting memes on X, and to another about misgendering Caitlyn Jenner. Elon Musk, on his own platform, X, described Gemini’s responses as “extremely alarming,” given that the tool would be embedded into Google’s other products. Google has not said whether it intends to pause Gemini altogether, and this is likely to be a challenging time for its public relations team.

Bias Fix Backfires

In trying to fix one problem, bias, the tech giant appears to have created another: output so politically correct that it becomes absurd. The explanation lies in the vast amount of data AI tools are trained on, much of it publicly available on the internet. This data contains biases: images of doctors, for example, are more likely to feature men, and images of cleaners are more likely to feature women. AI tools trained on this data have made embarrassing mistakes in the past, such as concluding that only men had high-powered jobs or failing to recognise black faces as human. Historical storytelling has also tended to feature men, omitting women’s roles from stories about the past.

Complex Challenges Ahead

Google has tried to offset this human bias by instructing Gemini not to make these assumptions, but the approach has backfired because human history and culture are not that simple. Humans grasp certain nuances instinctively; a machine will not make those distinctions unless it is specifically programmed to.

Demis Hassabis, co-founder of DeepMind, said fixing the image generator would take weeks, but other AI experts are not so sure. There is no single answer to what the outputs should be, and the AI ethics community has been working on possible ways to address this for years. One solution could involve asking users for their input, but this comes with its own red flags. Professor Alan Woodward, a computer scientist at Surrey University, said the problem is likely to be deeply embedded in the training data and algorithms, making it difficult to unpick.

Google’s Gemini Launch Issues

Google’s launch of Gemini was met with doubt because of the tool’s limitations and a wrong answer to a question about space in its publicity material. The rest of the tech sector faces the same issue: Rosie Campbell of ChatGPT creator OpenAI has said that even once bias is identified, correcting it is difficult and requires human input. On paper, Google has a considerable lead in the AI race, with its own AI chips, its own cloud network, access to vast amounts of data and a large user base. Yet it appears to have chosen a clumsy way of correcting old biases, and in doing so has unintentionally created a new set. A senior executive from a competing tech company said that watching Gemini’s missteps was like watching defeat snatched from the jaws of victory.